Introduction: From Isolated Solutions to Scalable Systems

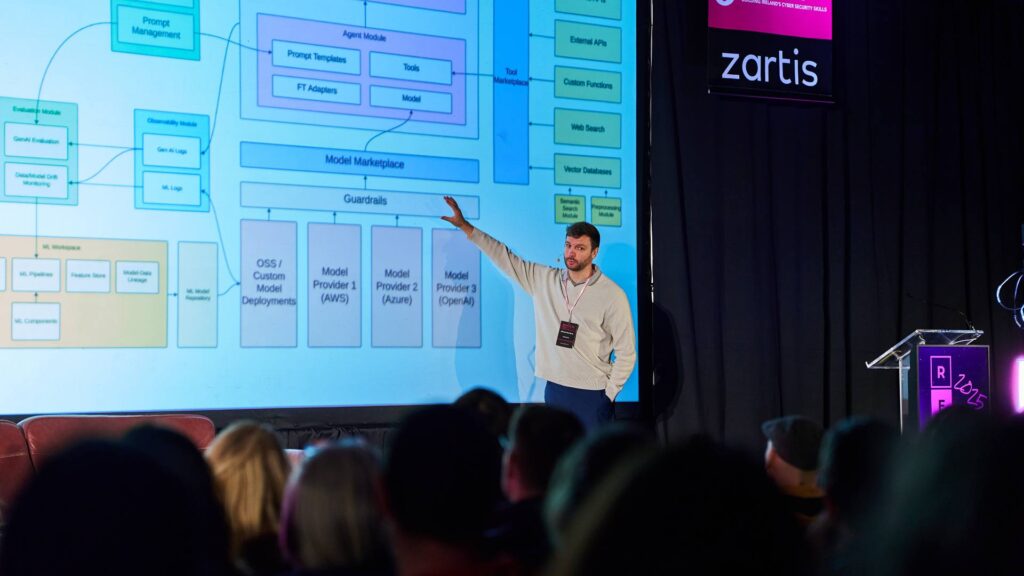

The public narrative around AI often centers on chatbots and conversational interfaces—tools that answer questions or summarize text. However, building enterprise-grade AI is not about a single solution; it’s about creating a scalable, secure, and flexible platform capable of supporting hundreds of AI-driven applications. True success demands a shift from siloed experimentation to platform thinking, where abstraction, governance, and reuse enable sustainable growth.

The following six lessons reveal the core truths of building enterprise-scale AI systems—and why they often defy common assumptions.

Delivered by Olivier Mertens, Principal AI Architect at Workhuman during RebelCon 2025.

1. The Goal Isn’t to Build AI — It’s to Hide Its Complexity

The hallmark of a mature AI platform is abstraction. Rather than having every developer learn deep AI mechanics, a centralized system should expose easy-to-use, pre-vetted components. Developers “configure” agents rather than “build” them.

This “create once, deploy everywhere” model allows scarce AI experts to focus on developing robust, reusable modules, while business teams rapidly assemble solutions from these building blocks. The result: faster innovation, reduced risk, and consistent application of best practices across the enterprise.

2. Generative AI Is Just One Tool in the Toolbox

While LLMs dominate headlines, they are not the answer to every problem. Many business tasks—forecasting, classification, clustering, network analysis—are more efficiently handled by traditional ML models.

Specialized models can be 10–100x more efficient than using an LLM for a narrow task like text classification. The most effective enterprise platforms integrate multiple AI paradigms—choosing the right model for the right job—rather than defaulting to generative AI for everything.

3. Prompts Are Production Code

Prompts define the behavior, tone, and reliability of AI agents. Because small changes can yield large, unpredictable shifts, prompts must be treated with the same rigor as application code:

- Version-controlled

- Auditable

- Stored in structured repositories (e.g., JSON stores or Git)

This practice ensures predictable, repeatable agent behavior and supports proper debugging, governance, and accountability as systems evolve.

4. Marketplaces Drive Agility and Future-Proofing

Enterprises can avoid vendor lock-in and maintain agility through two key abstraction layers:

- Model Marketplace: Acts as a switching layer between the platform and various model providers (e.g., AWS Bedrock, Azure OpenAI). Developers can easily swap models without re-engineering integrations—keeping the organization aligned with the best models available.

- Tool Marketplace: Provides reusable, pre-secured connections to APIs, databases, and other internal systems. Standards like MCP (Model Context Protocol) enhance this approach by enabling standardized connections across hundreds of solutions.

Together, these marketplaces make it possible to upgrade or replace AI components without breaking existing applications, ensuring long-term flexibility and scalability.

5. Security and Compliance Must Be Built-In, Not Bolted-On

Enterprise AI introduces unique security and data protection risks, from prompt injection attacks to unintentional data leaks. Security cannot be left to individual developers—it must be centralized within the platform architecture.

Key mechanisms include:

- Guardrails (Content Filters): Automatically block unsafe prompts, prevent misuse, and enforce topic restrictions.

- Private Connections: Use private networking (e.g., AWS PrivateLink, Azure Private Endpoints) to prevent data from traversing the public internet.

Embedding these controls at the platform layer ensures consistent enforcement, reduces human error, and minimizes enterprise-wide exposure.

6. Hallucinations and Bias Are Architectural Problems

Hallucinations and bias are not just flaws in models—they’re systemic challenges that must be mitigated through architecture and process.

- Hallucination Control: Implement “groundedness checks” that verify if responses are based on provided data. If unsupported, the system should suppress the answer—favoring “no response” over misinformation.

- Bias Mitigation: Use automated testing suites and red teaming (up to 11,000 simulated attacks) to detect demographic and systemic biases before deployment. Bias testing becomes a continuous, automated part of the development lifecycle.

Conclusion: The AI Platform as a Living Ecosystem

Building enterprise AI is not about creating a chatbot or deploying an LLM—it’s about constructing a living ecosystem where scalability, governance, and flexibility coexist.

By abstracting complexity, integrating diverse AI tools, enforcing security by design, and treating prompts and models as governed assets, organizations can transform AI from a novelty into a core operational capability.

The question for leaders is no longer whether they can build an AI solution—but whether they are building the foundation for an organization that can sustain hundreds of them.